Robo-Darwin and the theory of Robo-Evolution

In 1858, Charles Darwin published his seminal book “On the origin of species”. In it, he elaborated the theory of evolution and natural selection, after an expedition to the Galapagos archipelago where he observed birds from different species but the same genus adapting at specific conditions. He was by no means the first one to approach the concept of evolution, but never before somebody went so in depth in analysing the morphological features of different animals, and most of all no one dared imply that us humans were subject to this rule too. But his findings were and still are (to my disbelief) just a theory, conclusions he drew from mere observations of the world around him, he didn’t have the means to generate hard evidence to sustain his claims. Some would say he committed a sin of induction. Recently, the advances in computer science have made testing such theories possible, with the use of simulations. But how would you run these simulations, how do you teach a machine to learn and adapt?

Learning is a tough process. We can summarise it like this: there’s the information input, the process of assimilation and finally, a test to apply the information and verify its assimilation. Put like this it sounds pretty straightforward, but it shouldn’t surprise us if machines have a hard time doing that: they don’t have our same cognitive abilities that we do, yet we managed to create programs that learn to accomplish specific tasks at an increasingly fast pace.

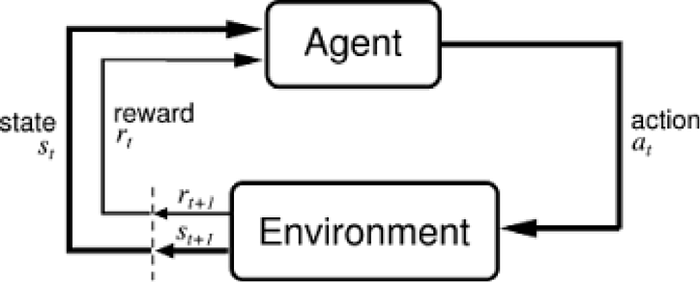

So, how does a machine learn to do tasks? There are many methods of machine learning, but one of them fits the case the best: a method called Reinforcement Learning. A basic RL machine is made like this:

- An agent that takes actions; for example, a drone making a delivery, or Super Mario navigating a video game. The algorithm is the agent.

- An Action (A): A is the set of all possible moves the agent can make. Agents are usually programmed to choose from a list of discrete, possible actions.

- An environment through which the agent moves, and which responds to the agent. If you are the agent, the environment could be the laws of physics that process your actions and determine the consequences of them.

- A state (S), the immediate situation in which the agent finds itself; i.e. a specific place and moment.

- A reward (R) is the feedback by which we measure the success or failure of an agent’s actions in a given state. For example, in a video game, when Mario touches a coin, he wins points.

It’s a very powerful algorithm, however, also quite slow. And by slow, I mean that we can sit for an hour in front of our computer and wonder why our learner does not work at all. If we remain patient, we can see it working, it just learns at a glacial pace.

So, why is this so slow? Well, two reasons. The first one is that the learning happens through incremental parameter adjustment. If a human fails really badly at a task, the human would know that a drastic adjustment to the strategy is necessary, while the reinforcement learner would start applying tiny changes to its behaviour and test again for things to get better. The second reason for it being slow is weak inductive bias. This means that the learner does not contain any information about the problem we have at hand, or in other words, has never seen the task it’s attempting before and has no other previous knowledge about tasks at all.

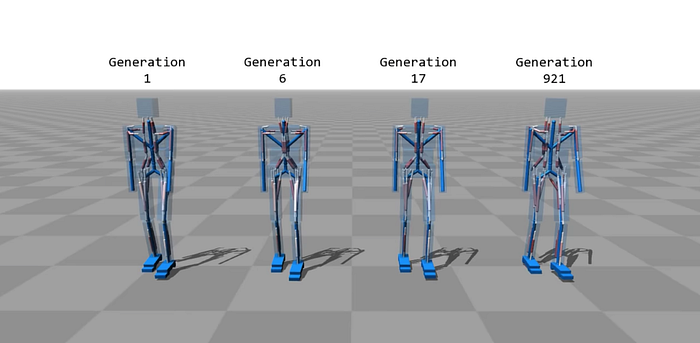

But this can also prove just how remarkable these algorithms are: in a joint experiment conducted between the University of British Columbia and the University of Utrecht, three scientists were able to program a physics simulation of walkers of different body shapes that learned to walk. What’s special about this experiment, is that the simulation not only perfected the gesture of walking itself, but also optimised the muscle routing that enabled it inside the body. What they obtained at the end of the experiment was a set of different bodies capable of moving at different speeds, on different slopes and even capable of adjusting their balance when objects were thrown at them.

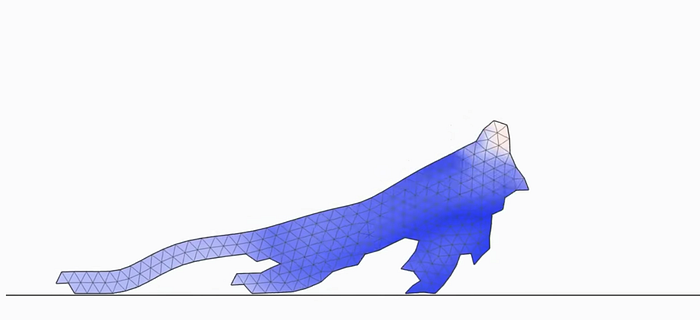

In a different but equally astounding one, a group of researchers at Nagoya University was able to yet again program some walkers, but the approach was completely different: this time, the walker was entirely made of muscle cells with no starting bone structure, and has also to learn to grow the whole body, which is grown from a single cell that through many iterations become hundreds and start taking very familiar shapes. The experiment was made to replicate and study metamorphosis at a cellular level.

But of all the models seen so far, my favourite example comes from OpenAI, a company co-owned by Elon Musk, that created an AI that learns to play hide and seek against itself. The conditions are fairly simple: hiders spawn in an environment with some objects scattered, get some time to hide and when the time is up the seekers spawn and have to find them. Through playthroughs that count in the millions, both the hiders and the seekers learn increasingly effective ways to win the game, to the point of using exploits in the very engine of the game.

Sources:

[https://www.goatstream.com/research/papers/SA2013/] Flexible Muscle-Based Locomotion for Bipedal Creature, by Thomas Geijtenbeek, Michiel van de Panne and Frank van der Stappen

[https://www.mitpressjournals.org/doi/pdf/10.1162/ARTL_a_00207] Artificial Metamorphosis:Evolutionary Design ofTransforming, Soft-Bodied Robots, by Michał Joachimczak, Reiji Suzuki and Takaya Arita